Charge-coupled device

A charge-coupled device (CCD) is a device for the movement of electrical charge, usually from within the device to an area where the charge can be manipulated, for example by conversion into a digital value. This is achieved by "shifting" the signals between stages within the device one at a time. CCDs move charge between capacitive bins in the device, with each shift transferring charge from one bin to the next.

Often the device is integrated with an image sensor, such as a photoelectric device to produce the charge that is being read, thus making the CCD a major technology for digital imaging. Although CCDs are not the only technology to allow for light detection, CCDs are widely used in professional, medical, and scientific applications where high-quality image data are required.

History

The charge-coupled device was invented in 1969 at AT&T Bell Labs by Willard Boyle and George E. Smith. The lab was working on semiconductor bubble memory when Boyle and Smith conceived of the design of what they termed, in their notebook, "Charge 'Bubble' Devices".[1] This first entry also described how the device could be used as a shift register and as linear and area imaging devices. The essence of the design was the ability to transfer charge along the surface of a semiconductor from one storage capacitor to the next.

The initial paper describing the concept[2] listed possible uses as a memory, a delay line, and an imaging device. The first experimental device[3] demonstrating the principle was a row of closely spaced metal squares on an oxidized silicon surface electrically accessed by wire bonds.

The first working CCD made with integrated circuit technology was a simple 8-bit shift register.[4] This device had input and output circuits and was used to demonstrate use as a shift register and as a crude eight pixel linear imaging device. Development of the device progressed at a rapid rate. By 1971, Bell researchers Michael F. Tompsett et al. were able to capture images with simple linear devices.[5]

Several companies, including Fairchild Semiconductor, RCA and Texas Instruments, picked up on the invention and began development programs. Fairchild's effort, led by ex-Bell researcher Gil Amelio, was the first with commercial devices, and by 1974 had a linear 500-element device and a 2-D 100 × 100 pixel device. Under the leadership of Kazuo Iwama, Sony also started a large CCD development effort involving a significant investment. Eventually, Sony managed to mass-produce CCDs for its camcorders. Iwama died in August 1982, before this happened; a CCD chip was subsequently placed on his tombstone to acknowledge his contribution.[6]

In January 2006, Boyle and Smith were awarded the National Academy of Engineering Charles Stark Draper Prize,[7] and in 2009 they were awarded the Nobel Prize in Physics,[8] for their work on the CCD.

Basics of operation

In a CCD for capturing images, there is a photoactive region (an epitaxial layer of silicon), and a transmission region made out of a shift register (the CCD, properly speaking).

An image is projected through a lens onto the capacitor array (the photoactive region), causing each capacitor to accumulate an electric charge proportional to the light intensity at that location. A one-dimensional array, used in line-scan cameras, captures a single slice of the image, while a two-dimensional array, used in video and still cameras, captures a two-dimensional picture corresponding to the scene projected onto the focal plane of the sensor. Once the array has been exposed to the image, a control circuit causes each capacitor to transfer its contents to its neighbor (operating as a shift register). The last capacitor in the array dumps its charge into a charge amplifier, which converts the charge into a voltage. By repeating this process, the controlling circuit converts the entire contents of the array in the semiconductor to a sequence of voltages. In a digital device, these voltages are then sampled, digitized, and usually stored in memory; in an analog device (such as an analog video camera), they are processed into a continuous analog signal (e.g. by feeding the output of the charge amplifier into a low-pass filter) which is then processed and fed out to other circuits for transmission, recording, or other processing.
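The readout sequence described above can be sketched in a few lines of Python. This is a toy model, not a description of any particular device: the function names `read_out` and `to_voltages`, the `gain` parameter, and the choice of which edge of the array feeds the serial register are all assumptions made for illustration.

```python
def read_out(wells):
    """Read a 2-D array of charge packets through a serial shift register.

    `wells` is a list of rows; each value is the charge held in one capacitor.
    Returns the charges in the order they reach the output amplifier.
    """
    rows = [list(r) for r in wells]      # copy so the caller's array is untouched
    n_cols = len(rows[0]) if rows else 0
    samples = []
    while rows:
        # Parallel shift: the row nearest the serial register moves into it.
        serial = rows.pop()
        # Serial shift: each packet steps toward the output one bin at a time.
        for _ in range(n_cols):
            samples.append(serial.pop())  # last bin dumps into the amplifier
            serial.insert(0, 0)           # an empty bin enters at the far end
    return samples


def to_voltages(samples, gain=1.0):
    """Hypothetical charge amplifier: output voltage proportional to charge."""
    return [gain * q for q in samples]


if __name__ == "__main__":
    image = [[1, 2, 3],
             [4, 5, 6]]
    print(to_voltages(read_out(image), gain=0.5))
```

The point of the sketch is that only one capacitor is ever connected to the amplifier; every other packet reaches it by being handed from neighbor to neighbor.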

Detailed physics of operation

The photoactive region of the CCD is, generally, an epitaxial layer of silicon. It is doped p+ (boron) and is grown upon a substrate material, often p++. In buried-channel devices, the type of design used in most modern CCDs, certain areas of the surface of the silicon are ion implanted with phosphorus, giving them an n-doped designation. This region defines the channel in which the photogenerated charge packets will travel. The gate oxide, i.e. the capacitor dielectric, is grown on top of the epitaxial layer and substrate. Later in the process, polysilicon gates are deposited by chemical vapor deposition, patterned with photolithography, and etched in such a way that the separately phased gates lie perpendicular to the channels. The channels are further defined by use of the LOCOS process to produce the channel stop region. Channel stops are thermally grown oxides that serve to isolate the charge packets in one column from those in another. These channel stops are produced before the polysilicon gates are, as the LOCOS process uses a high-temperature step that would destroy the gate material. The channel stops are parallel to, and exclusive of, the channel, or "charge carrying", regions. Channel stops often have a p+ doped region underlying them, providing a further barrier to the electrons in the charge packets (this discussion of the physics of CCD devices assumes an electron transfer device, though hole transfer is possible).

One should note that the clocking of the gates, alternately high and low, will forward and reverse bias the diode that is formed by the buried channel (n-doped) and the epitaxial layer (p-doped). This will cause the CCD to deplete near the p-n junction, and will collect and move the charge packets beneath the gates, and within the channels, of the device.

CCD manufacturing and operation can be optimized for different uses. The above process describes a frame transfer CCD. While CCDs may be manufactured on a heavily doped p++ wafer, it is also possible to manufacture a device inside p-wells that have been placed on an n-wafer. This second method, reportedly, reduces smear, dark current, and infrared and red response. This method of manufacture is used in the construction of interline transfer devices.

Another version of CCD is called a peristaltic CCD. In a peristaltic charge-coupled device, the charge packet transfer operation is analogous to the peristaltic contraction and dilation of the digestive system. The peristaltic CCD has an additional implant that keeps the charge away from the silicon/silicon dioxide interface and generates a large lateral electric field from one gate to the next. This provides an additional driving force to aid in transfer of the charge packets.

Architecture

CCD image sensors can be implemented in several different architectures. The most common are full-frame, frame-transfer, and interline. The distinguishing characteristic of each of these architectures is its approach to the problem of shuttering.

In a full-frame device, all of the image area is active, and there is no electronic shutter. A mechanical shutter must be added to this type of sensor or the image smears as the device is clocked or read out.

With a frame-transfer CCD, half of the silicon area is covered by an opaque mask (typically aluminum). The image can be quickly transferred from the image area to the opaque area or storage region with acceptable smear of a few percent. That image can then be read out slowly from the storage region while a new image is integrating or exposing in the active area. Frame-transfer devices typically do not require a mechanical shutter and were a common architecture for early solid-state broadcast cameras. The downside to the frame-transfer architecture is that it requires twice the silicon real estate of an equivalent full-frame device; hence, it costs roughly twice as much.

The interline architecture extends this concept one step further and masks every other column of the image sensor for storage. In this device, only one pixel shift has to occur to transfer from image area to storage area; thus, shutter times can be less than a microsecond and smear is essentially eliminated. The advantage is not free, however, as the imaging area is now covered by opaque strips, dropping the fill factor to approximately 50 percent and the effective quantum efficiency by an equivalent amount. Modern designs have addressed this deleterious characteristic by adding microlenses on the surface of the device to direct light away from the opaque regions and onto the active area. Microlenses can bring the fill factor back up to 90 percent or more, depending on pixel size and the overall system's optical design.

The choice of architecture comes down to one of utility. If the application cannot tolerate an expensive, failure-prone, power-intensive mechanical shutter, an interline device is the right choice. Consumer snapshot cameras have used interline devices. On the other hand, for applications that require the best possible light collection and where cost, power and time are less important, the full-frame device is the right choice. Astronomers tend to prefer full-frame devices. The frame-transfer architecture falls in between and was a common choice before the fill-factor issue of interline devices was addressed. Today, frame-transfer is usually chosen when an interline architecture is not available, such as in a back-illuminated device.

CCDs containing grids of pixels are used in digital cameras, optical scanners, and video cameras as light-sensing devices. They commonly respond to 70 percent of the incident light (meaning a quantum efficiency of about 70 percent) making them far more efficient than photographic film, which captures only about 2 percent of the incident light.

Most common types of CCDs are sensitive to near-infrared light, which allows infrared photography, night-vision devices, and zero lux (or near zero lux) video-recording/photography. For normal silicon-based detectors, the sensitivity is limited to 1.1 μm. One other consequence of their sensitivity to infrared is that infrared from remote controls often appears on CCD-based digital cameras or camcorders if they do not have infrared blockers.
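The 1.1 μm limit quoted above is consistent with the band gap of silicon: a photon can only free an electron if its energy is at least the gap energy, so the longest detectable wavelength is λ = hc/E_g. The short calculation below is a sketch of that relation; the band-gap figure of 1.12 eV (a commonly quoted room-temperature value) is an assumption not stated in the text, and the function name is illustrative.

```python
# Exact SI values of the physical constants.
PLANCK_H = 6.62607015e-34         # J s
LIGHT_C = 299792458.0             # m / s
ELECTRON_VOLT = 1.602176634e-19   # J per eV

SILICON_BAND_GAP_EV = 1.12        # assumed room-temperature value


def cutoff_wavelength_um(band_gap_ev):
    """Longest wavelength (in micrometres) whose photons can bridge the gap."""
    energy_joule = band_gap_ev * ELECTRON_VOLT
    return PLANCK_H * LIGHT_C / energy_joule * 1e6


if __name__ == "__main__":
    print(round(cutoff_wavelength_um(SILICON_BAND_GAP_EV), 3))
```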

Cooling reduces the array's dark current, improving the sensitivity of the CCD to low light intensities, even for ultraviolet and visible wavelengths. Professional observatories often cool their detectors with liquid nitrogen to reduce the dark current, and therefore the thermal noise, to negligible levels.

Use in astronomy

Due to the high quantum efficiencies of CCDs, linearity of their outputs (one count for one photon of light), ease of use compared to photographic plates, and a variety of other reasons, CCDs were very rapidly adopted by astronomers for nearly all UV-to-infrared applications. Thermal noise and cosmic rays may alter the pixels in the CCD array. To counter such effects, astronomers take several exposures with the CCD shutter closed and opened. Averaging the images taken with the shutter closed lowers the random noise. The resulting average dark frame is then subtracted from the open-shutter image to remove the dark current and other systematic defects (dead pixels, hot pixels, etc.) in the CCD. The Hubble Space Telescope, in particular, has a highly developed series of steps ("data reduction pipeline") to convert the raw CCD data to useful images. See the references for a more in-depth description of the steps in astronomical CCD image-data correction and processing.[9]
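The dark-frame correction described above amounts to a per-pixel average followed by a per-pixel subtraction. The following is a minimal sketch using plain nested lists; the function names and the sample pixel values are made up for illustration, and a real reduction pipeline performs further steps (bias and flat-field correction, cosmic-ray rejection) that are not shown.

```python
def mean_frame(frames):
    """Average several equally sized frames pixel by pixel."""
    n = len(frames)
    height = len(frames[0])
    width = len(frames[0][0])
    return [[sum(f[r][c] for f in frames) / n for c in range(width)]
            for r in range(height)]


def subtract_dark(light, darks):
    """Subtract the averaged closed-shutter frames from an open-shutter frame."""
    master_dark = mean_frame(darks)
    return [[l - d for l, d in zip(light_row, dark_row)]
            for light_row, dark_row in zip(light, master_dark)]


if __name__ == "__main__":
    # Two closed-shutter exposures; the pixel at row 1, column 0 is "hot".
    darks = [[[10, 12], [50, 10]],
             [[12, 10], [54, 10]]]
    light = [[111, 211], [152, 10]]
    print(subtract_dark(light, darks))
```

Averaging several dark frames before subtracting matters because a single dark frame carries its own random noise, which would otherwise be added to the corrected image.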

CCD cameras used in astrophotography often require sturdy mounts to cope with vibrations from wind and other sources, along with the tremendous weight of most imaging platforms. To take long exposures of galaxies and nebulae, many astronomers use a technique known as auto-guiding. Most autoguiders use a second CCD chip to monitor deviations during imaging. This chip can rapidly detect errors in tracking and command the mount motors to correct for them.

An unusual astronomical application of CCDs, called drift-scanning, uses a CCD to make a fixed telescope behave like a tracking telescope and follow the motion of the sky. The charges in the CCD are transferred and read in a direction parallel to the motion of the sky, and at the same speed. In this way, the telescope can image a larger region of the sky than its normal field of view. The Sloan Digital Sky Survey is the most famous example of this, using the technique to produce the largest uniform survey of the sky yet accomplished.
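Drift-scanning can be illustrated with a one-dimensional toy model in which the sky moves across the sensor by exactly one row per clock step and the charge is shifted by one row in the same direction at each step. The function name `drift_scan` and the idealized assumptions (perfectly matched rates, no noise, unit exposure per step) are illustrative only.

```python
def drift_scan(sky, n_rows):
    """Simulate drift-scanning a 1-D strip of sky across `n_rows` CCD rows.

    sky[k] is the flux of the k-th sky element per clock step. The sky moves
    one row per step and the charge is shifted at the same rate, so each
    charge packet stays under the same sky element while crossing the chip.
    """
    wells = [0.0] * n_rows
    read = []
    for t in range(len(sky) + n_rows - 1):
        # Exposure: row i currently sees sky element t - i, if it exists.
        for i in range(n_rows):
            k = t - i
            if 0 <= k < len(sky):
                wells[i] += sky[k]
        # Shift: the last row is read out and an empty row enters at the top.
        read.append(wells.pop())
        wells.insert(0, 0.0)
    # The first n_rows - 1 readouts are empty packets that started mid-chip.
    return read[n_rows - 1:]


if __name__ == "__main__":
    print(drift_scan([1.0, 2.0, 3.0], n_rows=4))
```

In this model each sky element is integrated for `n_rows` steps, and the strip of sky recorded can be arbitrarily longer than the sensor itself, which is the property the technique exploits.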

In addition to astronomy, CCDs are also used in laboratory analytical instrumentation such as monochromators, spectrometers, and N-slit interferometers.

Color cameras

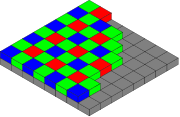

Digital color cameras generally use a Bayer mask over the CCD. Each square of four pixels has one filtered red, one blue, and two green (the human eye is more sensitive to green than either red or blue). The result of this is that luminance information is collected at every pixel, but the color resolution is lower than the luminance resolution.
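As a rough illustration of the Bayer arrangement, the sketch below tiles a 2 × 2 filter pattern over a sensor and keeps, for each pixel, only the color channel its filter passes. The particular ordering of the tile (`G R / B G`) is one possible layout chosen for the example, and the function names are hypothetical.

```python
# One 2x2 tile: one red, one blue and two green filters (ordering assumed).
BAYER_TILE = (("G", "R"),
              ("B", "G"))
CHANNEL_INDEX = {"R": 0, "G": 1, "B": 2}


def filter_color(row, col):
    """Color of the filter covering the pixel at (row, col)."""
    return BAYER_TILE[row % 2][col % 2]


def mosaic(rgb_image):
    """Reduce a full RGB image to the single value each CCD pixel records."""
    return [[pixel[CHANNEL_INDEX[filter_color(r, c)]]
             for c, pixel in enumerate(row)]
            for r, row in enumerate(rgb_image)]


def color_counts(height, width):
    """Number of pixels behind each filter color on a height x width sensor."""
    counts = {"R": 0, "G": 0, "B": 0}
    for r in range(height):
        for c in range(width):
            counts[filter_color(r, c)] += 1
    return counts


if __name__ == "__main__":
    print(color_counts(4, 4))
    print(mosaic([[(1, 2, 3), (4, 5, 6)],
                  [(7, 8, 9), (10, 11, 12)]]))
```

The counts show why color resolution is lower than the pixel count suggests: red and blue are each sampled at only a quarter of the pixel sites, and the missing values must be interpolated.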

Better color separation can be achieved with three-CCD devices (3CCD) and a dichroic beam splitter prism that splits the image into red, green and blue components. Each of the three CCDs is arranged to respond to a particular color. Most professional video camcorders, and some semi-professional camcorders, use this technique. Another advantage of 3CCD over a Bayer mask device is higher quantum efficiency (and therefore higher light sensitivity for a given aperture size). This is because in a 3CCD device most of the light entering the aperture is captured by a sensor, while a Bayer mask absorbs a high proportion (about 2/3) of the light falling on each CCD pixel.

For still scenes, for instance in microscopy, the resolution of a Bayer mask device can be enhanced by microscanning technology. During the process of color co-site sampling, several frames of the scene are produced. Between acquisitions, the sensor is moved in steps of one pixel, so that each point in the visual field is acquired consecutively by elements of the mask that are sensitive to the red, green and blue components of its color. Eventually every pixel in the image has been scanned at least once in each color, and the resolutions of the three channels become equivalent (the resolutions of the red and blue channels are quadrupled while that of the green channel is doubled).

Sensor sizes

Sensors (CCD / CMOS) are often referred to by an inch-fraction designation such as 1/1.8" or 2/3", called the optical format. This designation dates back to the 1950s and the era of Vidicon tubes. Compact digital cameras typically have much smaller sensors than a digital SLR and are thus less sensitive to light and inherently more prone to noise. Some examples of the CCDs found in modern cameras are tabulated in a Digital Photography Review article.

| Type | Aspect ratio | Width (mm) | Height (mm) | Diagonal (mm) | Area (mm²) | Relative area |
|---|---|---|---|---|---|---|
| 1/6" | 4:3 | 2.300 | 1.730 | 2.878 | 3.979 | 1.000 |
| 1/4" | 4:3 | 3.200 | 2.400 | 4.000 | 7.680 | 1.930 |
| 1/3.6" | 4:3 | 4.000 | 3.000 | 5.000 | 12.000 | 3.016 |
| 1/3.2" | 4:3 | 4.536 | 3.416 | 5.678 | 15.495 | 3.894 |
| 1/3" | 4:3 | 4.800 | 3.600 | 6.000 | 17.280 | 4.343 |
| 1/2.7" | 4:3 | 5.270 | 3.960 | 6.592 | 20.869 | 5.245 |
| 1/2" | 4:3 | 6.400 | 4.800 | 8.000 | 30.720 | 7.721 |
| 1/1.8" | 4:3 | 7.176 | 5.319 | 8.932 | 38.169 | 9.593 |
| 2/3" | 4:3 | 8.800 | 6.600 | 11.000 | 58.080 | 14.597 |
| 1" | 4:3 | 12.800 | 9.600 | 16.000 | 122.880 | 30.882 |
| 4/3" | 4:3 | 18.000 | 13.500 | 22.500 | 243.000 | 61.070 |
| Other image sizes as a comparison | | | | | | |
| APS-C | 3:2 | 25.100 | 16.700 | 30.148 | 419.170 | 105.346 |
| 35mm | 3:2 | 36.000 | 24.000 | 43.267 | 864.000 | 217.140 |
| 645 | 4:3 | 56.000 | 41.500 | 69.701 | 2324.000 | 584.066 |
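The last three numeric columns of the table appear to be derived from the width and height alone: the diagonal by the Pythagorean theorem, the area as width times height, and the final column as the area relative to the smallest (1/6") format. The sketch below reproduces them under that reading; the function name `sensor_geometry` and the constant name are illustrative.

```python
import math

# Area of the 1/6" format (2.300 mm x 1.730 mm), used as the reference.
REFERENCE_AREA_MM2 = 2.300 * 1.730


def sensor_geometry(width_mm, height_mm):
    """Return (diagonal in mm, area in mm^2, area relative to the 1/6" format)."""
    diagonal = math.hypot(width_mm, height_mm)
    area = width_mm * height_mm
    return diagonal, area, area / REFERENCE_AREA_MM2


if __name__ == "__main__":
    for name, w, h in [('1/6"', 2.300, 1.730), ("35mm", 36.000, 24.000)]:
        d, a, rel = sensor_geometry(w, h)
        print(f"{name}: diagonal {d:.3f} mm, area {a:.3f} mm^2, relative {rel:.3f}")
```

The relative-area column makes the light-gathering gap concrete: a 35 mm frame has roughly 217 times the area of a 1/6" sensor.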

See also

- Image sensor

- Photodiode

- CMOS sensor

- Bayer filter

- Electron multiplying CCD

- 3CCD

- Frame transfer CCD

- Rotating line camera

- Intensified charge-coupled device

- Superconducting camera

- Super CCD

- Wide dynamic range

- Foveon X3 sensor

- Hole Accumulation Diode (HAD)

- Camcorder

References

- [1] James R. Janesick (2001). Scientific charge-coupled devices. SPIE Press. p. 4. ISBN 9780819436986. http://books.google.com/books?id=3GyE4SWytn4C&pg=PA3&dq=charge+bubble+device+boyle+smith&as_brr=3&ei=cbzjSrjlLZOilQSy7qjyCw#v=onepage&q=charge%20bubble%20device%20boyle%20smith&f=false.

- [2] W. S. Boyle and G. E. Smith (April 1970). "Charge Coupled Semiconductor Devices". Bell Sys. Tech. J. 49 (4): 587–593.

- [3] G. F. Amelio, M. F. Tompsett, and G. E. Smith (April 1970). "Experimental Verification of the Charge Coupled Device Concept". Bell Sys. Tech. J. 49 (4): 593–600.

- [4] M. F. Tompsett, G. F. Amelio, and G. E. Smith (1 August 1970). "Charge Coupled 8-bit Shift Register". Applied Physics Letters 17: 111–115. doi:10.1063/1.1653327.

- [5] M. F. Tompsett, G. F. Amelio, W. J. Bertram, Jr., R. R. Buckley, W. J. McNamara, J. C. Mikkelsen, Jr., and D. A. Sealer (November 1971). "Charge-coupled imaging devices: Experimental results". IEEE Transactions on Electron Devices 18 (11): 992–996. ISSN 0018-9383.

- [6] B. Johnstone (1999). We Were Burning: Japanese Entrepreneurs and the Forging of the Electronic Age. New York: Basic Books. ISBN 0465091172.

- [7] Charles Stark Draper Award, http://www.nae.edu/NAE/awardscom.nsf/weblinks/CGOZ-6K9L6P?OpenDocument

- [8] Nobel Prize website, http://nobelprize.org/nobel_prizes/physics/laureates/2009/

- [9] Hainaut, Oliver R. (December 2006). "Basic CCD image processing". http://www.sc.eso.org/~ohainaut/ccd. Retrieved October 7, 2009.

Hainaut, Oliver R. (June 1, 2005). "Signal, Noise and Detection". http://www.eso.org/~ohainaut/ccd/sn.html. Retrieved October 7, 2009.

Hainaut, Oliver R. (May 20, 2009). "Retouching of astronomical data for the production of outreach images". http://www.eso.org/~ohainaut/images/imageProc.html. Retrieved October 7, 2009.

(Hainaut is an astronomer at the European Southern Observatory.)

External links

- Journal Article On Basics of CCDs

- Eastman Kodak Primer on CCDs

- Nikon microscopy introduction to CCDs

- Concepts in Digital Imaging Technology

- CCDs for Material Scientists

- CCD vs. CMOS technical comparison

- Micrograph of the photosensor array of a webcam.